I made an SVG generation tool

Fun side project

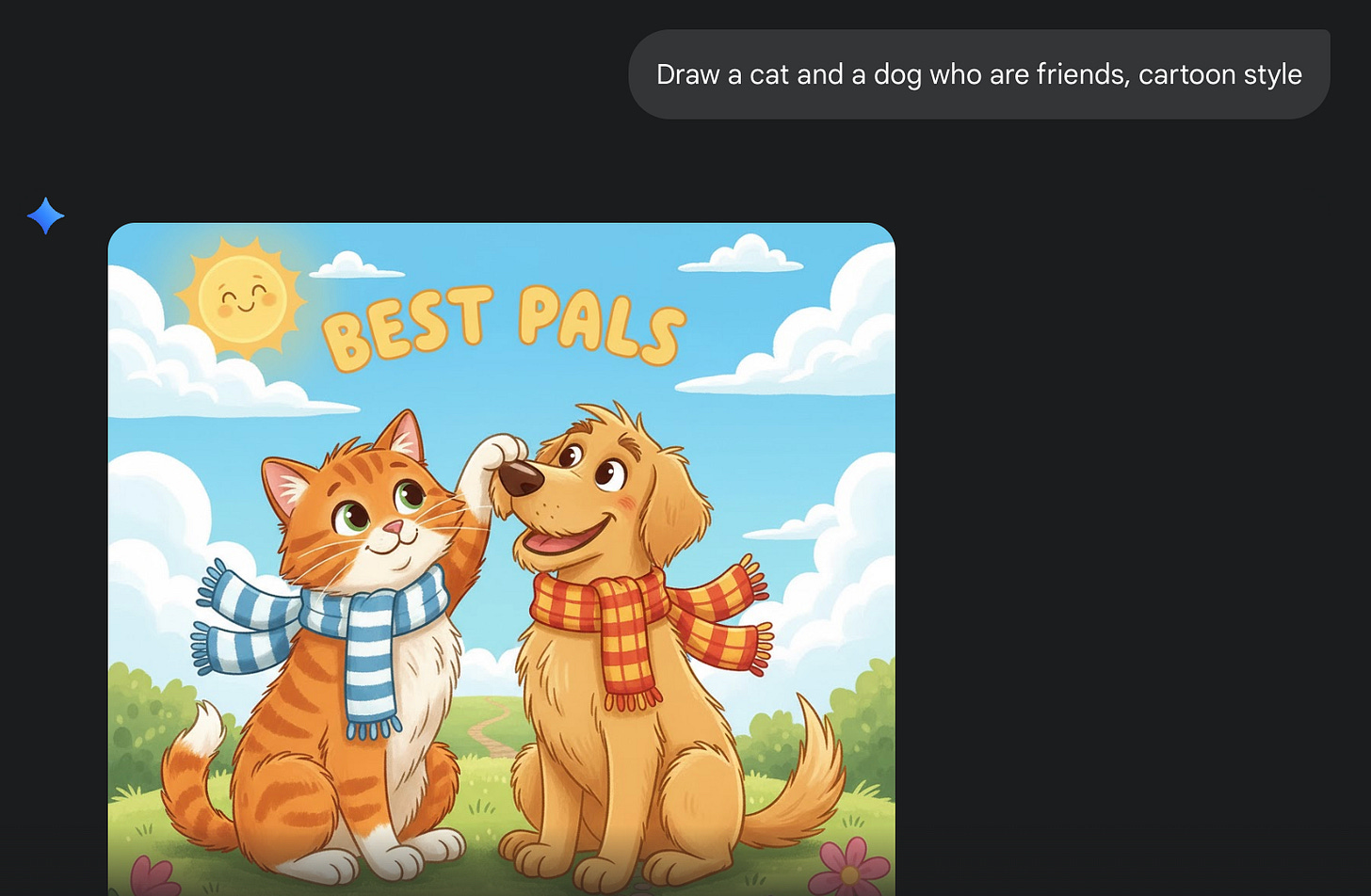

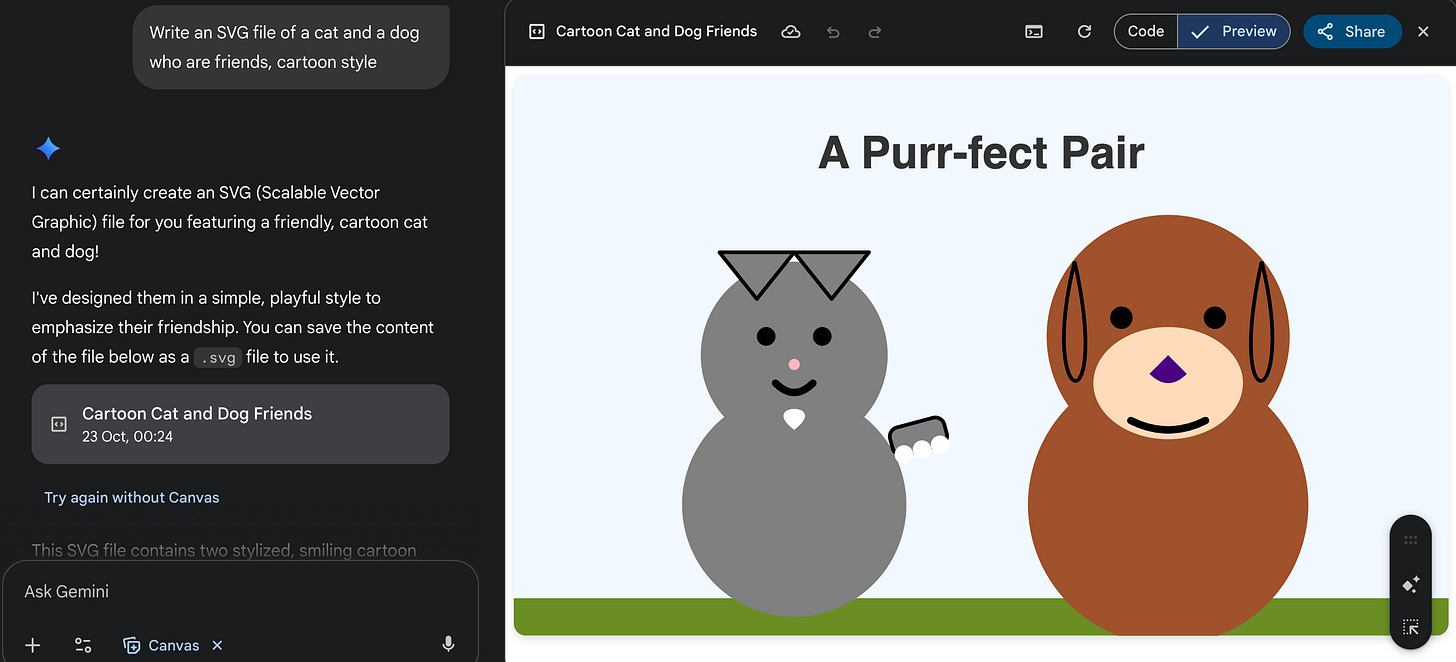

Image-generation models are getting very good. But we're not yet at the point of automating graphic design. Why? A major reason is that 2D design assets often benefit from being defined in terms of vectors rather than pixels. This way they can be scaled to any size without losing definition, and can be easily edited, animated, and recolored. This is where the SVG (Scalable Vector Graphics) file format comes in. Unlike raster images (like PNG or JPEG), which are made up of pixels, SVGs are defined using geometric primitives such as paths, curves, and polygons. However, out of the box, LLMs are very poor at outputting SVGs. Models like ChatGPT and Gemini can produce very advanced image outputs, but their SVG outputs are of much lower quality by comparison.
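
To make the vector-versus-pixel point concrete, here is a minimal sketch of a hand-written SVG built from Python. The function name `make_svg` and the shapes are made up for illustration and are not part of the tool. The drawing lives in a fixed `viewBox` coordinate system, so rendering it at 16 px or 1600 px only changes two attributes, not the geometry.

```python
import xml.etree.ElementTree as ET


def make_svg(width: int, height: int) -> str:
    """Return an SVG document drawn in a fixed 100x100 coordinate system.

    Hypothetical helper for illustration only.
    """
    return (
        '<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{width}" height="{height}" viewBox="0 0 100 100">\n'
        # A polygon is just a list of corner points.
        '  <polygon points="50,10 90,90 10,90" fill="#ffcc00"/>\n'
        # A path with one cubic Bezier segment (the "C" command).
        '  <path d="M 10 50 C 30 10, 70 90, 90 50" '
        'fill="none" stroke="#222222" stroke-width="4"/>\n'
        '</svg>\n'
    )


small = make_svg(16, 16)      # favicon-sized
large = make_svg(1600, 1600)  # poster-sized, same shapes

# Both strings are well-formed XML with the same two child shapes.
root = ET.fromstring(small)
print(root.tag, len(root))
```

Recoloring is equally cheap: changing `fill="#ffcc00"` edits one attribute, whereas a raster image would need every affected pixel repainted.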

The other day I bought the domain scalablevector.graphics and built a simple service that pipes together a few different tools to generate SVG web graphics. I've tried to make something like this a couple of times before, but it failed because the underlying generative image model was poor at instruction-following. Finally, this is no longer a blocker, and I'm closer to my dream of ridding the internet of blurry graphics and logos.

How does it work? I might open-source the code soon but the pipeline is pretty simple:

1. Generate an image using Gemini 2.5 Flash with a custom prompt
2. Do some post-processing and cleanup in Python / numpy
3. Use some open-source SVG path tracing tools
4. Use some open-source SVG optimization tools
5. Final step of color tweaking in Python
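
As a rough illustration of what the numpy cleanup step could look like, here is a sketch that snaps every pixel to the nearest color in a small fixed palette. This is an assumption about the kind of cleanup involved, not the tool's actual code. The function name `quantize_to_palette` and the example palette are hypothetical. Flattening noisy gradients into a few solid colors generally gives a path tracer cleaner regions to outline.

```python
import numpy as np


def quantize_to_palette(image: np.ndarray, palette: np.ndarray) -> np.ndarray:
    """Snap each pixel of an (H, W, 3) uint8 image to its nearest palette color.

    Hypothetical helper: `palette` is a (K, 3) uint8 array of allowed colors.
    """
    # Use a wider integer type so squared differences cannot overflow uint8.
    pixels = image.reshape(-1, 1, 3).astype(np.int32)    # shape (N, 1, 3)
    colors = palette.reshape(1, -1, 3).astype(np.int32)  # shape (1, K, 3)

    # Squared Euclidean distance from every pixel to every palette color.
    dist = ((pixels - colors) ** 2).sum(axis=2)          # shape (N, K)

    # Index of the closest palette color for each pixel.
    nearest = dist.argmin(axis=1)
    return palette[nearest].reshape(image.shape).astype(np.uint8)


# Example palette (an assumption): white, black, and one accent color.
palette = np.array([[255, 255, 255], [0, 0, 0], [255, 204, 0]], dtype=np.uint8)

# A 2x2 "noisy" image whose pixels are near, but not exactly on, the palette.
noisy = np.array(
    [[[250, 252, 249], [12, 8, 10]],
     [[240, 200, 20], [3, 3, 3]]],
    dtype=np.uint8,
)

flat = quantize_to_palette(noisy, palette)
print(flat.tolist())
```

The distance matrix holds N × K entries, so for large images or large palettes it would be worth processing the image in chunks.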

Upvote my tool on Product Hunt if you find it useful!

I recall that around February/March 2024, anthrupad was quite active in producing LLM-generated SVG animations, such as https://x.com/anthrupad/status/1770948782886355293?s=20.